It's a best practice to use the smallest possible column size for VARCHAR columns. To generate a list of tables with maximum column widths, run the following query: `SELECT database, schema || '.' || "table" AS "table", max_varchar FROM svv_table_info WHERE max_varchar > 150 ORDER BY 2;` To identify and display the true widths of the wide VARCHAR table columns, run the following query, replacing column_name and table_name with your own names: `SELECT max(octet_length(rtrim(column_name))) FROM table_name;` In the output from this query, validate that the length is appropriate for your use case. If the columns are at maximum length and exceed your needs, adjust their length to the minimum size needed. For more information about table design, review the Amazon Redshift best practices for designing tables.

High column compression

Amazon Redshift provides column encoding, which increases read performance and reduces overall storage consumption, so it's a best practice to use this feature. Encode all columns (except the sort key) by using ANALYZE COMPRESSION or the automatic table optimization feature in Amazon Redshift.

Maintenance operations

Be sure that the tables in your Amazon Redshift database are regularly analyzed and vacuumed. Identify any queries that run against tables that are missing statistics. Preventing queries from running against such tables keeps Amazon Redshift from scanning unnecessary table rows.
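As a rough sketch of the maintenance guidance above, the following statements list tables whose statistics or sort order look stale and then refresh one of them. The thresholds of 10 and the name `schema_name.table_name` are illustrative assumptions, not values from the original article; `stats_off` and `unsorted` are columns of the SVV_TABLE_INFO system view.

```sql
-- Tables that may need maintenance. The cutoffs (10) are arbitrary
-- example values; pick ones that suit your workload.
SELECT "schema" || '.' || "table" AS "table",
       stats_off,   -- how stale the planner statistics are (0 = current)
       unsorted     -- percentage of rows not in sort key order
FROM svv_table_info
WHERE stats_off > 10
   OR unsorted > 10
ORDER BY stats_off DESC;

-- Refresh one of the tables reported above.
-- schema_name.table_name is a placeholder.
VACUUM FULL schema_name.table_name;        -- reclaim space and re-sort rows
ANALYZE schema_name.table_name;            -- update planner statistics
ANALYZE COMPRESSION schema_name.table_name; -- report suggested column encodings
```

ANALYZE COMPRESSION only reports recommendations; it doesn't change the table's encodings by itself.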

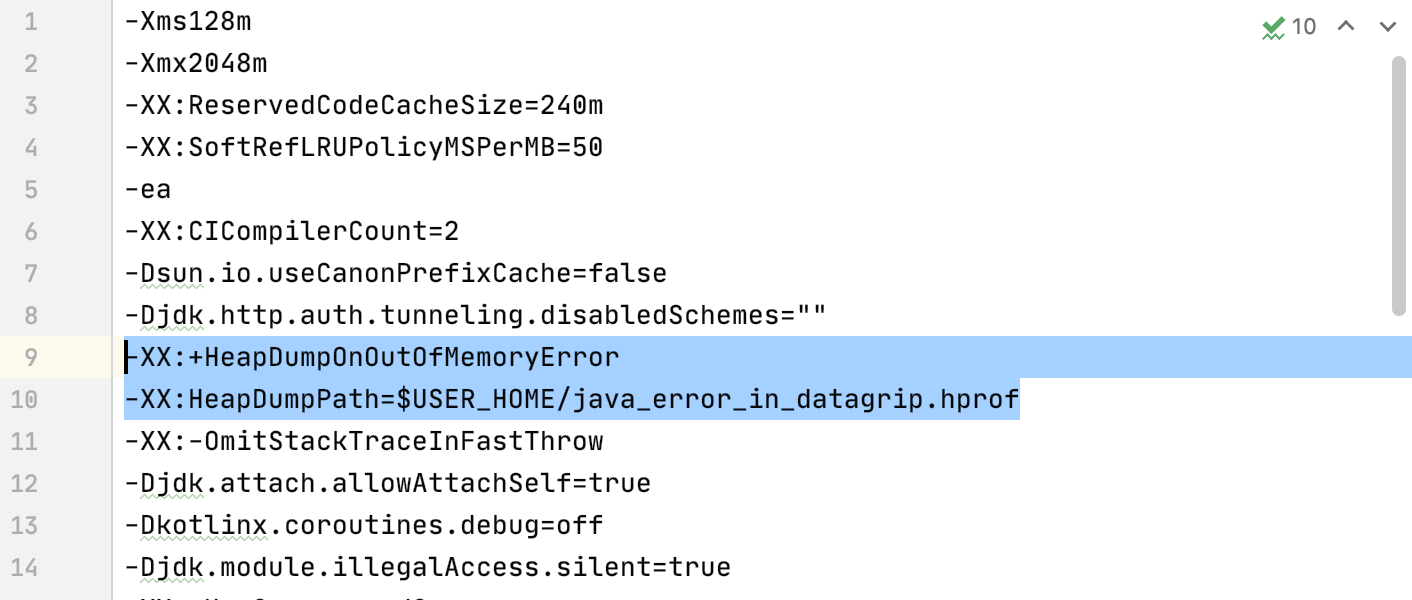


You can also use WLM query monitoring rules to counter heavy processing loads and to identify I/O intensive queries.

Tables with VARCHAR(MAX) columns

Check VARCHAR or CHARACTER VARYING columns for trailing blanks that might be omitted when data is stored on the disk. During query processing, trailing blanks can occupy the full length in memory (the maximum value for VARCHAR is 65535).

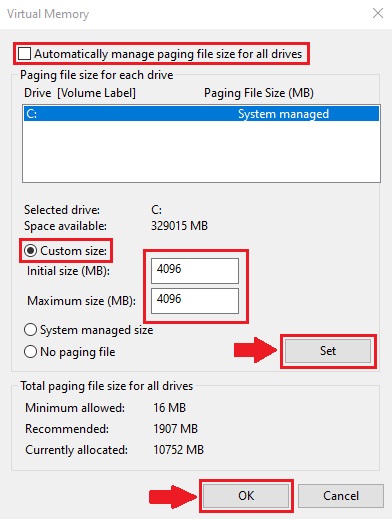


If insufficient memory is allocated to your query, you might see a step in SVL_QUERY_SUMMARY where is_diskbased shows the value "true". To resolve this issue, increase the number of query slots to allocate more memory to the query. For more information about how to temporarily increase the slots for a query, see wlm_query_slot_count or tune your WLM to run mixed workloads.
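A minimal sketch of that check and fix might look like the following. It assumes you run it in the same session as the query you are investigating, and the slot count of 3 is only an example; the usable maximum depends on how your WLM queue is configured.

```sql
-- Find steps of the most recent query in this session that spilled to disk.
SELECT query, seg, step, label, rows, workmem, is_diskbased
FROM svl_query_summary
WHERE query = pg_last_query_id()
  AND is_diskbased = 't'
ORDER BY workmem DESC;

-- Temporarily claim more slots (and therefore more memory) for this session.
-- The value 3 is an illustrative assumption.
SET wlm_query_slot_count TO 3;

-- ... rerun the memory-intensive query here ...

-- Return to the default so that other queries in the queue aren't starved.
SET wlm_query_slot_count TO 1;
```

While the session holds extra slots, fewer queries can run concurrently in the same queue, so reset the value as soon as the heavy query finishes.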

Intermediate result sets aren't compressed, which affects the available disk space. For more information, see Insufficient memory allocated to the query.

Amazon Redshift defaults to a table structure with even distribution and no column encoding for temporary tables. If you are using the SELECT ... INTO syntax, use a CREATE statement instead. For more information, see Top 10 performance tuning techniques for Amazon Redshift, and follow the instructions under Tip #6: Address the inefficient use of temporary tables.
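To illustrate the temporary table advice above, here is a hedged sketch that replaces a SELECT ... INTO with an explicit CREATE statement. The table and column names (`stage_orders`, `orders`, `customer_id`, `order_date`, `amount`), the date filter, and the chosen keys and encodings are all hypothetical and should be adapted to your own data.

```sql
-- Explicit definition: column encodings plus a distribution key and sort key,
-- instead of the defaults that SELECT ... INTO would produce.
CREATE TEMPORARY TABLE stage_orders (
    customer_id BIGINT        ENCODE az64,
    order_date  DATE          ENCODE raw,   -- sort key column left unencoded
    amount      DECIMAL(12,2) ENCODE az64
)
DISTKEY (customer_id)
SORTKEY (order_date);

-- Populate the temporary table from the (hypothetical) source table.
INSERT INTO stage_orders
SELECT customer_id, order_date, amount
FROM orders
WHERE order_date >= '2024-01-01';
```

Choosing a distribution key that matches later joins keeps the temporary table's rows on the same slices as the rows they join to.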


High disk usage errors can depend on several factors, including distribution and sort keys, query processing, tables with VARCHAR(MAX) columns, column compression, and maintenance operations.

Distribution and sort key

Review the table's distribution style, distribution key, and sort key selection. Tables with distribution skew, where more data is located in one node than in the others, can cause a full disk node. If you have tables with skewed distribution styles, then change the distribution style to a more uniform distribution. Note that distribution and row skew can affect storage skew and the intermediate rowset when a query is running. For more information about distribution keys and sort keys, see Amazon Redshift engineering's advanced table design playbook: preamble, prerequisites, and prioritization.

To determine the cardinality of your distribution key, run the following query, replacing distkey_column and schema_name.table_name (placeholders) with your distribution key column and the skewed table: `SELECT distkey_column, COUNT(*) FROM schema_name.table_name GROUP BY distkey_column HAVING COUNT(*) > 1 ORDER BY 2 DESC;`

Note: To avoid a sort step, use SORT KEY columns in your ORDER BY clause. A sort step can use excessive memory, causing a disk spill. For more information, see Working with sort keys.

In the filtered result set, choose a column with high cardinality to view its data distribution. For more information on the distribution style of your table, see Choose the best distribution style. To see how database blocks in a distribution key are mapped to a cluster, use the Amazon Redshift table_inspector.sql utility.

Query processing

While a query is processing, intermediate query results can be stored in temporary blocks. If there isn't enough free memory, then the tables cause a disk spill.
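One way to find candidate tables for the distribution review described above is to query the SVV_TABLE_INFO system view. This is a sketch, not part of the original article: the LIMIT of 20 is an arbitrary choice, and what counts as a problematic `skew_rows` value depends on your cluster.

```sql
-- Tables ordered by row skew. skew_rows is the ratio of rows on the slice
-- with the most rows to rows on the slice with the fewest.
SELECT "schema" || '.' || "table" AS "table",
       diststyle,
       skew_rows,
       size,       -- table size in 1 MB blocks
       pct_used    -- percentage of available space used by the table
FROM svv_table_info
WHERE skew_rows IS NOT NULL
ORDER BY skew_rows DESC
LIMIT 20;
```

Tables near the top of this list are reasonable starting points for the distribution key cardinality check.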
